Research Frontiers

Pioneering the future of Perception, Industry, and Embodied AI.

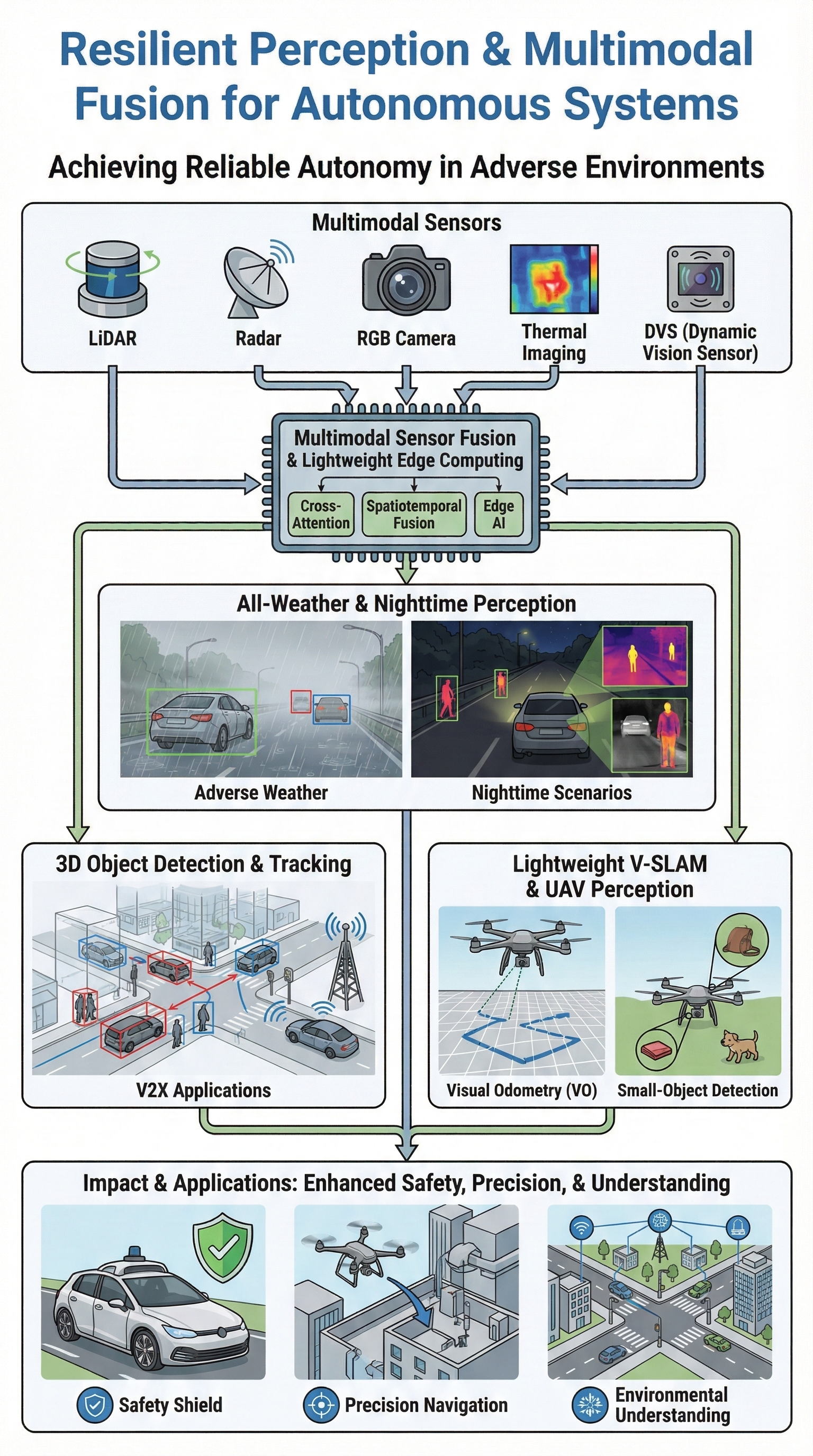

Resilient Perception & Multimodal Fusion

Achieving reliable autonomy in complex, dynamic, and unstructured environments. We develop robust perception architectures that remain effective in adverse weather, poor visibility, and low-light conditions. Our strategy relies on multimodal sensor fusion (LiDAR, Radar, Camera, Thermal, and DVS event cameras) to improve safety and navigation precision for autonomous vehicles and UAVs.

Multimodal Sensor Fusion

Cross-Attention and Spatiotemporal architectures combining Camera, LiDAR, Radar, and Thermal data.

All-Weather Perception

Robust object detection algorithms designed specifically for rain, fog, and nighttime scenarios.

2D/3D Object Detection

High-precision 3D localization and tracking systems applied to autonomous driving and V2X.

UAV Perception

Efficient Visual Odometry (VO) and small-object detection optimized for drones.

Key Technologies

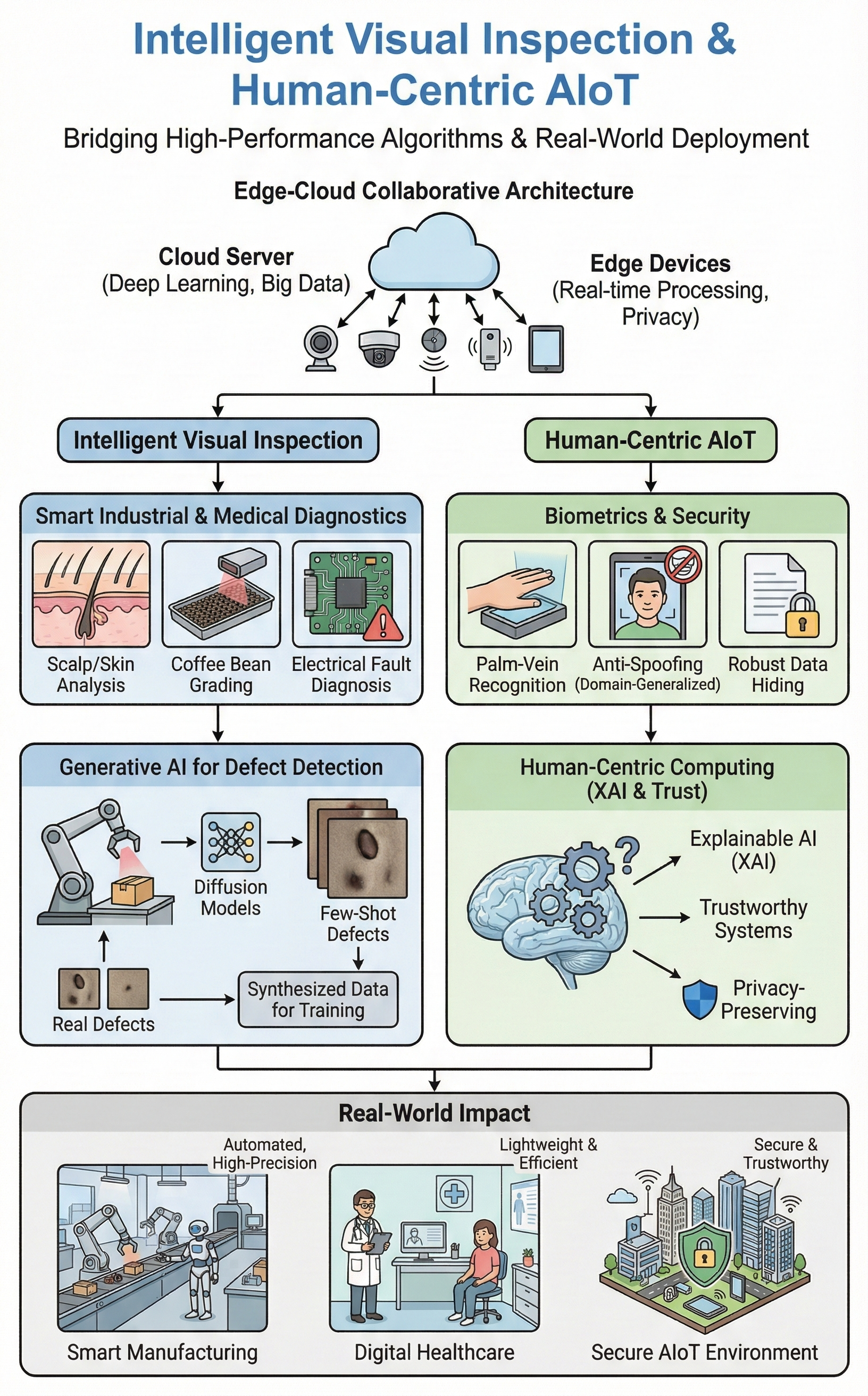

Intelligent Visual Inspection & AIoT

Bridging high-performance algorithms with real-world deployment. We specialize in Industrial Inspection and Healthcare Diagnostics, using lightweight deep learning and domain adaptation to overcome data scarcity. Our work in Human-Centric Computing prioritizes trust, privacy, and explainable AI (XAI).

Smart Diagnostics

Automated inspection for medical imaging (X-ray, dermatology) and industrial manufacturing.

Edge-Cloud Collaboration

Distributed frameworks optimizing real-time processing and privacy across edge devices.

Biometrics & Security

Advanced palm-vein recognition, face anti-spoofing, and robust data hiding techniques.

Generative Defect AI

Using Diffusion Models to synthesize defect samples for few-shot learning in manufacturing.

Key Technologies

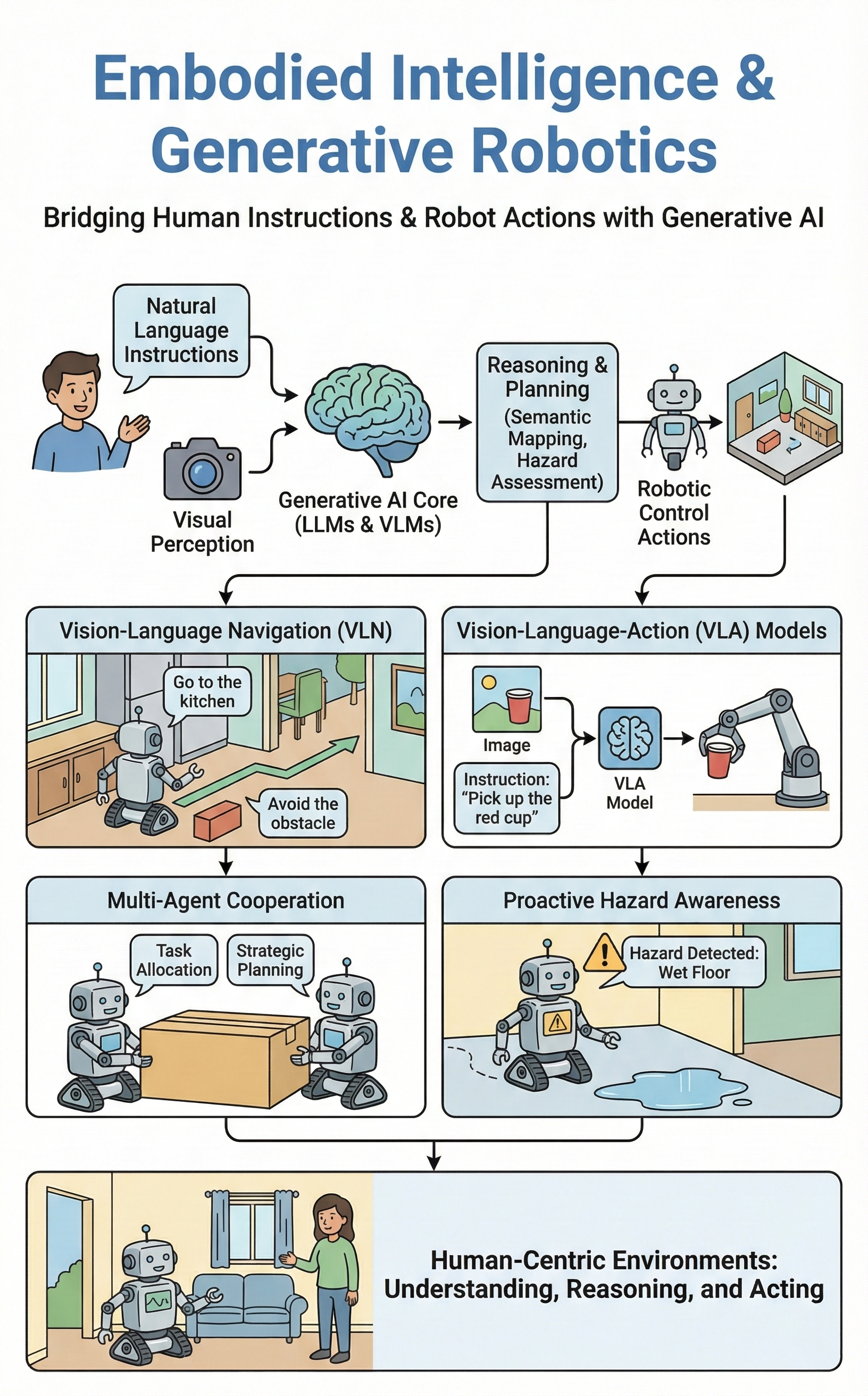

Embodied Intelligence & Generative Robotics

Closing the semantic gap between human instructions and robot actions. By integrating LLMs and VLMs with robotic control, we empower agents to perform natural language navigation, hazard assessment, and multi-agent cooperation in human-centric environments.

Vision-Language Navigation

Semantic mapping and path planning driven by natural language instructions, known as vision-language navigation (VLN).

VLA Models

End-to-end Vision-Language-Action (VLA) models that map visual observations and language instructions directly to robot control commands.

Multi-Agent Systems

Using LLMs to orchestrate communication and strategy among multiple robotic agents.

Proactive Safety

Language-driven reasoning for identifying environmental risks and navigating safely.